微调最早是在图片领域用的更多, NLP虽然来的晚,但是后来居上. 不过微调的思路和一些tricks其实也没有什么区别.

Fine-tuning a pretrained model – Deep Learning with Python 2nd Edition

Another widely used technique for model reuse, complementary to feature extraction, is fine-tuning (see figure 8.15). Fine-tuning consists of unfreezing a few of the top layers of a frozen model base used for feature extraction, and jointly training both the newly added part of the model (in this case, the fully connected classifier) and these top layers. This is called fine-tuning because it slightly adjusts the more abstract representations of the model being reused in order to make them more relevant for the problem at hand.

I stated earlier that it’s necessary to freeze the convolution base of VGG16 in order to be able to train a randomly initialized classifier on top. For the same reason, it’s only possible to fine-tune the top layers of the convolutional base once the classifier on top has already been trained. If the classifier isn’t already trained, the error signal propagating through the network during training will be too large, and the representations previously learned by the layers being fine-tuned will be destroyed. Thus the steps for fine-tuning a network are as follows:

- Add our custom network on top of an already-trained base network.

- Freeze the base network.

- Train the part we added.

- Unfreeze some layers in the base network.(Note that you should not unfreeze “batch normalization” layers, which are not relevant here since there are no such layers in VGG16. Batch normalization and its impact on fine- tuning is explained in the next chapter.)

- Jointly train both these layers and the part we added.

You already completed the first three steps when doing feature extraction. Let’s proceed with step 4: we’ll unfreeze our conv_base and then freeze indi- vidual layers inside it.

Why not fine-tune more layers?

Why not fine-tune the entire convolutional base?

You could. But you need to consider the following:

- Earlier layers in the convolutional base encode more generic, reusable features, whereas layers higher up encode more specialized features. It’s more useful to fine-tune the more specialized features, because these are the ones that need to be repurposed on your new problem. There would be fast-decreasing returns in fine-tuning lower layers.

- The more parameters you’re training, the more you’re at risk of overfitting. The convolutional base has 15 million parameters, so it would be risky to attempt to train it on your small dataset.

Thus, in this situation, it’s a good strategy to fine-tune only the top two or three layers in the convolutional base. Let’s set this up, starting from where we left off in the previ- ous example.

Dive into Deep Learning

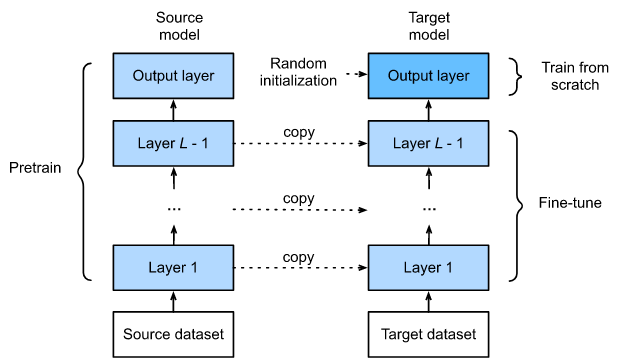

As shown in Fig. 14.2.1, fine-tuning consists of the following four steps:

- Pretrain a neural network model, i.e., the source model, on a source dataset (e.g., the ImageNet dataset).

- Create a new neural network model, i.e., the target model. This copies all model designs and their parameters on the source model except the output layer. We assume that these model parameters contain the knowledge learned from the source dataset and this knowledge will also be applicable to the target dataset. We also assume that the output layer of the source model is closely related to the labels of the source dataset; thus it is not used in the target model.

- Add an output layer to the target model, whose number of outputs is the number of categories in the target dataset. Then randomly initialize the model parameters of this layer.

- Train the target model on the target dataset, such as a chair dataset. The output layer will be trained from scratch, while the parameters of all the other layers are fine-tuned based on the parameters of the source model.

When target datasets are much smaller than source datasets, fine-tuning helps to improve models’ generalization ability.

# If `param_group=True`, the model parameters in the output layer will be

# updated using a learning rate ten times greater

def train_fine_tuning(net, learning_rate, batch_size=128, num_epochs=5,

param_group=True):

train_iter = torch.utils.data.DataLoader(torchvision.datasets.ImageFolder(

os.path.join(data_dir, 'train'), transform=train_augs),

batch_size=batch_size, shuffle=True)

test_iter = torch.utils.data.DataLoader(torchvision.datasets.ImageFolder(

os.path.join(data_dir, 'test'), transform=test_augs),

batch_size=batch_size)

devices = d2l.try_all_gpus()

loss = nn.CrossEntropyLoss(reduction="none")

if param_group:

params_1x = [param for name, param in net.named_parameters()

if name not in ["fc.weight", "fc.bias"]]

trainer = torch.optim.SGD([{'params': params_1x}, //Here is the learning rate strategy.

{'params': net.fc.parameters(),

'lr': learning_rate * 10}],

lr=learning_rate, weight_decay=0.001)

else:

trainer = torch.optim.SGD(net.parameters(), lr=learning_rate,

weight_decay=0.001)

d2l.train_ch13(net, train_iter, test_iter, loss, trainer, num_epochs,

devices)We set the base learning rate to a small value in order to fine-tune the model parameters obtained via pretraining. Based on the previous settings, we will train the output layer parameters of the target model from scratch using a learning rate ten times greater.

Generally, fine-tuning parameters uses a smaller learning rate, while training the output layer from scratch can use a larger learning rate.